Components

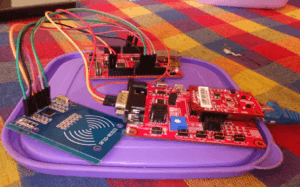

Hardware Components

- WIZnet WIZ750SR × 1

- RFID Module (Generic) RC522 × 1

- RJ45 Patch Cord CAT5 Ethernet LAN Network Patch Cable × 1

- TP-Link TL-WR841N 300Mbps Wireless-N Router × 1

- Amazon Alexa Echo Dot × 1

- Ultrasonic Sensor - HC-SR04 (Generic) × 1

Software Apps and Online Services

- ThingSpeak API

Basic Objective

- The basic objective of the project is to build an autonomous robot that would guide new students, admitted candidates or visitors around the campus of their college, university or organization. It would take them around the campus and tell them about each block, the specializations offered and the most happening places on campus. We would use the Amazon Echo (Alexa) for natural language processing (NLP) and OpenCV for object detection and avoidance.

- The WizWiki-W7500 and WIZ750SR are used for authentication and activation: the robot can be activated only with an authorized RFID tag, depending on the result of that check. A router supports the data transmission, and the MQTT protocol distributes telemetry messages to the client. The RFID tags would also be customized to store destination-specific information, which would enable the robot to go to the desired spot.
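The tag check described above can be sketched in Python. This assumes the W7500 firmware forwards the raw 5-byte frame from the RC522's anticollision step: four UID bytes followed by a BCC check byte, which for a 4-byte UID is the XOR of those four bytes. The allowlist `AUTHORIZED_TAGS`, its UIDs and the tag-to-block mapping are hypothetical placeholders, and Python stands in for whatever language actually runs on the board.

```python
from functools import reduce
from typing import Optional

# Hypothetical allowlist: tag UID (upper-case hex) -> destination block.
# Real UIDs and destinations would come from the project's own tags.
AUTHORIZED_TAGS = {
    "DEADBEEF": "Electronics",
    "0A1B2C3D": "Computers",
}


def uid_from_anticollision(frame: bytes) -> str:
    """Validate a 5-byte anticollision frame (4 UID bytes + BCC), return the UID.

    For a 4-byte UID the BCC byte is the XOR of the four UID bytes, so a
    mismatch means the read was corrupted. Raises ValueError on bad input.
    """
    if len(frame) != 5:
        raise ValueError("expected 4 UID bytes followed by 1 BCC byte")
    uid, bcc = frame[:4], frame[4]
    if reduce(lambda a, b: a ^ b, uid) != bcc:
        raise ValueError("BCC mismatch: corrupted read")
    return uid.hex().upper()


def authorize(frame: bytes) -> Optional[str]:
    """Return the destination block for an authorized tag, or None to stay locked."""
    try:
        uid = uid_from_anticollision(frame)
    except ValueError:
        return None
    return AUTHORIZED_TAGS.get(uid)
```

Keeping the destination in the allowlist (rather than trusting data written on the tag) means a cloned tag with an unknown UID cannot activate the robot; storing the destination on the tag itself, as the project proposes, would replace the dictionary lookup with a read of the tag's data blocks.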

This project is divided into three modules.

MODULE I:

CONFIGURATION OF AMAZON ECHO AND ALEXA VOICE SERVICES :-

- Implementing basic AWS Lambda functions along with the code for the Alexa skill.

- Implementing SSML (Speech Synthesis Markup Language) responses and AWS IoT.

- Implementing Node-RED flows and communicating over the MQTT protocol or over HTTP.

- Creating the intents for the Alexa skill.

- Handling the user’s speech: recognizing it and turning it into tokens that identify the “intent” and any associated contextual parameters (slots).
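A minimal sketch of the Lambda side, in Python, assuming the standard Alexa Skills Kit JSON request and response format and no ASK SDK. The slot name `Subject` and the spoken replies are hypothetical; the handler answers `WhatsMyBlockIntent` with an SSML response and asks a follow-up question when no subject was recognized.

```python
from xml.sax.saxutils import escape

# Subjects from this skill's list; each is assumed to name a campus block.
BLOCKS = ("Electronics", "Electrical", "Mechanical", "Computers", "Civil")


def ssml_response(text, end_session=True):
    """Build an Alexa Skills Kit JSON response whose speech is SSML."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "SSML", "ssml": f"<speak>{escape(text)}</speak>"},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event, context=None):
    """AWS Lambda entry point: takes the request dict, returns the response dict."""
    request = event.get("request", {})
    if request.get("type") == "LaunchRequest":
        return ssml_response("Welcome! Which subject are you studying?", end_session=False)

    intent = request.get("intent", {})
    if request.get("type") == "IntentRequest" and intent.get("name") == "WhatsMyBlockIntent":
        # "Subject" is a hypothetical slot; Alexa omits "value" when nothing matched.
        slot = intent.get("slots", {}).get("Subject", {})
        value = (slot.get("value") or "").strip().lower()
        for block in BLOCKS:
            if block.lower() == value:
                return ssml_response(f"Follow me to the {block} block.")
        # No usable subject: keep the session open and ask again.
        choices = ", ".join(BLOCKS[:-1]) + " or " + BLOCKS[-1]
        return ssml_response(f"Which subject are you studying: {choices}?", end_session=False)

    return ssml_response("Sorry, I can only guide you to a block.")
```

`escape()` keeps characters such as `&` from breaking the SSML document; richer SSML (pauses, emphasis) would be added around the escaped text rather than inside it.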

USER COMMANDS:-

- WhatsMyBlockIntent what’s my suitable block

- WhatsMyBlockIntent what is my block

- WhatsMyBlockIntent what’s my block

- WhatsMyBlockIntent my block

- WhatsMyBlockIntent my favorite block

- WhatsMyBlockIntent get my block

Here “WhatsMyBlockIntent” is the name of the intent; each line pairs it with a sample utterance, that is, a phrase a user might say to trigger that intent. In the skill’s JSON interaction model, these utterances are listed as the intent’s samples. The subjects that let Echo understand which block it needs to go to are as follows:-

- Electronics

- Electrical

- Mechanical

- Computers

- Civil
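For illustration, the utterances and subjects above can be assembled into an Alexa interaction model. This sketch builds the JSON as a Python dict; the invocation name `campus guide`, the slot `Subject`, the custom slot type `SUBJECT_TYPE` and the extra sample `my subject is {Subject}` are hypothetical additions, since the listed utterances carry no slot of their own.

```python
import json

# Sample utterances for WhatsMyBlockIntent, as listed in this project.
SAMPLES = [
    "what's my suitable block",
    "what is my block",
    "what's my block",
    "my block",
    "my favorite block",
    "get my block",
    "my subject is {Subject}",  # hypothetical: the only sample that fills the slot
]
SUBJECTS = ["Electronics", "Electrical", "Mechanical", "Computers", "Civil"]


def build_language_model(invocation_name="campus guide"):
    """Return an interaction-model dict in the Alexa Skills Kit JSON layout."""
    return {
        "interactionModel": {
            "languageModel": {
                "invocationName": invocation_name,
                "intents": [
                    {
                        "name": "WhatsMyBlockIntent",
                        "slots": [{"name": "Subject", "type": "SUBJECT_TYPE"}],
                        "samples": SAMPLES,
                    },
                    # Standard built-in intents a custom skill is expected to handle.
                    {"name": "AMAZON.CancelIntent", "samples": []},
                    {"name": "AMAZON.HelpIntent", "samples": []},
                    {"name": "AMAZON.StopIntent", "samples": []},
                ],
                "types": [
                    {
                        "name": "SUBJECT_TYPE",
                        "values": [{"name": {"value": s}} for s in SUBJECTS],
                    }
                ],
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(build_language_model(), indent=2))
```

The printed JSON is the kind of text pasted into the developer console's JSON Editor; generating it from two Python lists keeps the utterances and subject values in one place.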

MODULE II:

CONNECTING WIZ750SR MODULE WITH WIZWIKI-W7500 :-

- Receiving data from RFID tags when they are tapped on the RFID reader connected to the WizWiki-W7500.

- Connecting the WIZ750SR to the WizWiki-W7500 through an RS232 module.

- Sending the data received on the WIZ750SR to the ThingSpeak cloud server.

- Building a user interface that controls ECHOTRON wirelessly.

- Receiving data from the ThingSpeak cloud and reading the value on the WizWiki-W7500 module installed on ECHOTRON.
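As a sketch of the cloud leg, the two ThingSpeak REST calls involved are a channel update (`/update` with a write API key) and a read of a field's latest value (`/channels/<id>/fields/<n>/last.txt`). The Python below assumes the tag UID is stored in `field1`; the keys and channel ID are placeholders, and on the robot itself equivalent HTTP requests would be issued from the WIZnet side rather than from Python.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

THINGSPEAK = "https://api.thingspeak.com"


def update_url(write_key, **fields):
    """Build a channel-update URL, e.g. update_url(KEY, field1="DEADBEEF")."""
    return f"{THINGSPEAK}/update?" + urlencode({"api_key": write_key, **fields})


def last_value_url(channel_id, field=1, read_key=None):
    """Build the URL that returns one field's latest value as plain text."""
    url = f"{THINGSPEAK}/channels/{channel_id}/fields/{field}/last.txt"
    # A read key is only needed for private channels.
    return url + ("?" + urlencode({"api_key": read_key}) if read_key else "")


def send_tag(write_key, uid):
    """Upload a tag UID; ThingSpeak replies with the new entry ID, or 0 on failure."""
    with urlopen(update_url(write_key, field1=uid), timeout=10) as resp:
        return int(resp.read().decode().strip())


def read_latest(channel_id, read_key=None, field=1):
    """Fetch the most recent value of a field (what the robot side polls for)."""
    with urlopen(last_value_url(channel_id, field, read_key), timeout=10) as resp:
        return resp.read().decode().strip()
```

Usage: `send_tag("WRITE_KEY", "DEADBEEF")` on the reader side, then `read_latest(CHANNEL_ID, "READ_KEY")` on the robot side; both names are placeholders for the channel's real credentials.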

MODULE III:

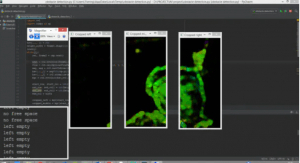

OBSTACLE DETECTION AND AVOIDANCE USING OPENCV :-

- Since ECHOTRON is an autonomous robot, computer vision is one of the important components of this project. The basic strategy of this module is to let the robot see its environment so that it can avoid obstacles.

- Using concepts such as optical flow and sub conversion to keep track of obstacles in ECHOTRON’s path.
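One simple way to turn tracked flow into an avoidance decision, not described in the project itself, is the so-called balance strategy: while the robot moves forward, nearer objects (other things being equal) produce larger image motion, so the robot steers away from the half of the frame with more flow. The function name, the 1.2 ratio and the left/right split below are hypothetical choices for illustration.

```python
from math import hypot


def mean(values):
    """Arithmetic mean, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def steer_from_flow(points, flows, frame_width, ratio=1.2):
    """Suggest 'left', 'right' or 'forward' from per-point optical flow.

    points: (x, y) pixel positions of tracked features in the current frame.
    flows:  (dx, dy) displacement of each feature since the previous frame.
    """
    left, right = [], []
    for (x, _y), (dx, dy) in zip(points, flows):
        (left if x < frame_width / 2 else right).append(hypot(dx, dy))
    mean_left, mean_right = mean(left), mean(right)
    if mean_left > ratio * mean_right:
        return "right"  # more motion on the left: obstacle likely closer there
    if mean_right > ratio * mean_left:
        return "left"
    return "forward"
```

The ratio adds hysteresis so small, noisy differences between the two halves keep the robot going straight instead of weaving.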

OPTICAL FLOW:-

- Optical flow is the pattern of apparent motion of image objects between two consecutive frames, caused by the movement of the object or the camera.

- It is a 2D vector field in which each vector is a displacement vector showing how a point moves from the first frame to the second.

- We will use functions such as cv2.calcOpticalFlowPyrLK() to track feature points across the frames of a video.
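cv2.calcOpticalFlowPyrLK() implements the pyramidal, iterative Lucas-Kanade method. To show the core idea, here is a single-level, single-iteration Lucas-Kanade estimate for one window in plain Python. Assuming brightness constancy and small motion, each pixel gives Ix·u + Iy·v + It ≈ 0, and the equations from all pixels in the window are solved together in the least-squares sense. The function and its parameters are illustrative, not OpenCV's API.

```python
def lucas_kanade(prev, curr, x0, y0, half=2):
    """Estimate the (u, v) displacement of the window centred at (x0, y0).

    prev, curr: 2-D lists of grey values indexed as frame[y][x].
    Returns None when the window has no unique solution (aperture problem).
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(y0 - half, y0 + half + 1):
        for x in range(x0 - half, x0 + half + 1):
            # Central-difference spatial gradients, averaged over both frames.
            ix = ((prev[y][x + 1] - prev[y][x - 1]) + (curr[y][x + 1] - curr[y][x - 1])) / 4.0
            iy = ((prev[y + 1][x] - prev[y - 1][x]) + (curr[y + 1][x] - curr[y - 1][x])) / 4.0
            it = curr[y][x] - prev[y][x]  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None  # flat or edge-only patch: motion is ambiguous
    # Cramer's rule for [[sxx, sxy], [sxy, syy]] [u, v] = [-sxt, -syt].
    u = (-sxt * syy + sxy * syt) / det
    v = (-sxx * syt + sxy * sxt) / det
    return u, v
```

In OpenCV the same estimate is computed for many points at once, typically corners found with cv2.goodFeaturesToTrack(), and cv2.calcOpticalFlowPyrLK() returns the new point positions together with a per-point status array and error. The image pyramid lets it handle displacements larger than the few pixels this single-level version can track.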
